What sort of internet do we want? How do we combat disinformation? Is there a role for the government? Do corporations have responsibilities? Is it down to us, the users?

Four Internets: Data, Geopolitics, and the Governance of Cyberspace by O’Hara and Hall is a fascinating read that outlines four different models of the internet that currently exist. I think we can all learn a lot from it, and it offers some interesting tools for thinking about NAFO and the fight against disinformation. It’s a very easy-to-read book, considering it was written by a philosopher and a computer expert. It doesn’t prescribe how we should run the internet, nor does it claim that people think in these terms when they engage online. But it can help us consider what sort of internet we want, and the ways in which we should fight disinformation.

The four main models the book outlines are the Silicon Valley model, the Washington DC model, the Brussels model, and the Beijing model. It also adds a fifth, the Moscow Spoiler model, and discusses developing alternatives. It does not claim these models are rigid; in fact, they overlap. Rather, they are archetypes we can use to shape our thinking.

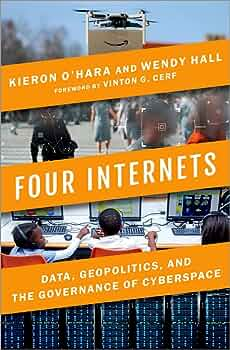

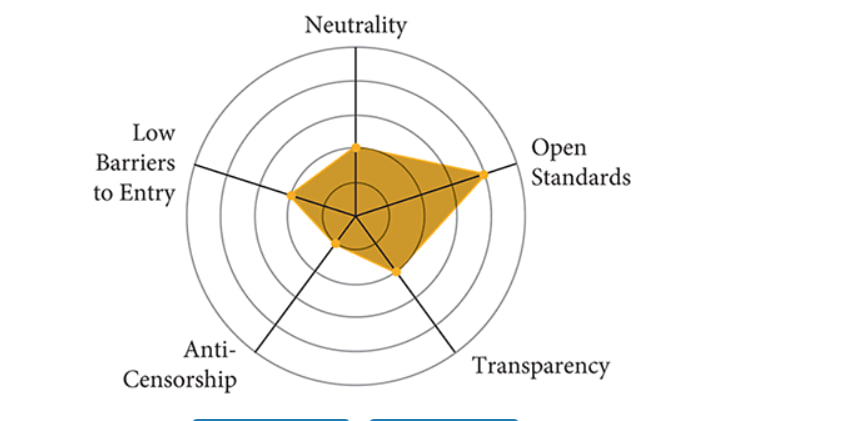

The Silicon Valley model emphasises anonymity, decentralised networks, and the free exchange of information. It is best understood as the model of the early internet: pared-down networks and the free transfer of information without obstacles. Low barriers, no censorship, and a neutral net that does not impede equal access are all fundamental components of this view.

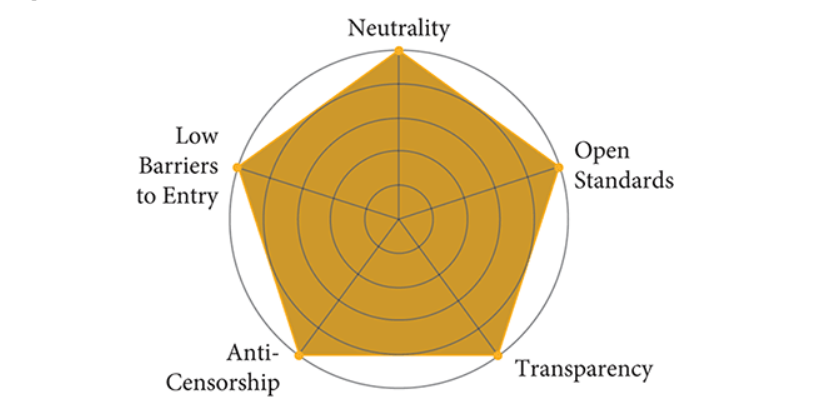

The DC model can be understood as the corporate internet, the “walled gardens”, where the internet is a space for private ownership, advertising, and efficiency, with the state facilitating all of this. It shares the Silicon Valley model’s suspicion of too much state regulation; the difference is that it offers more protection to large monopolies and places more emphasis on ownership. It is also less keen on net neutrality, preferring to defer to corporations rather than enforce net neutrality through regulation. In many ways, early proponents of the Silicon Valley model have moved closer to this one. Think of Musk, Zuckerberg, and others, who now want to use the government to protect their use of data and their profits, even at the expense of newer disruptors.

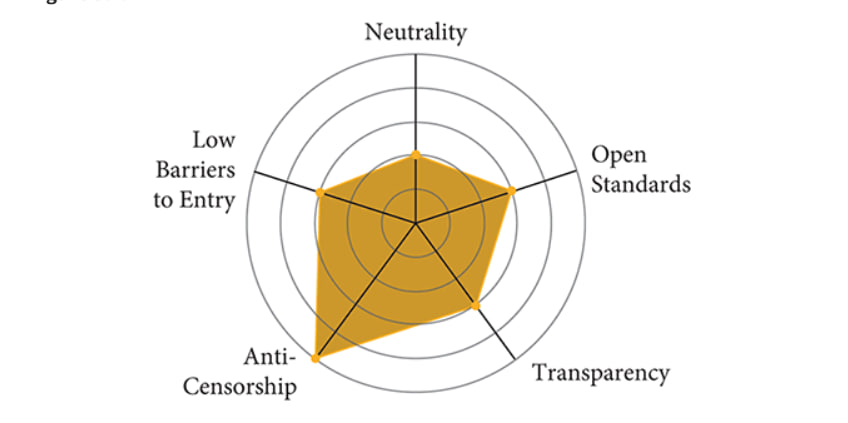

The Brussels model is all about protecting the user rather than the provider, which fits EU regulations and principles very well. Think of GDPR: protecting data and privacy, and making sure that providers adhere to the rules. It gives less weight to individual freedom when that freedom extends to, say, disinformation, scams, or hate speech, though it does aim to protect users’ freedom from corporations or bad actors.

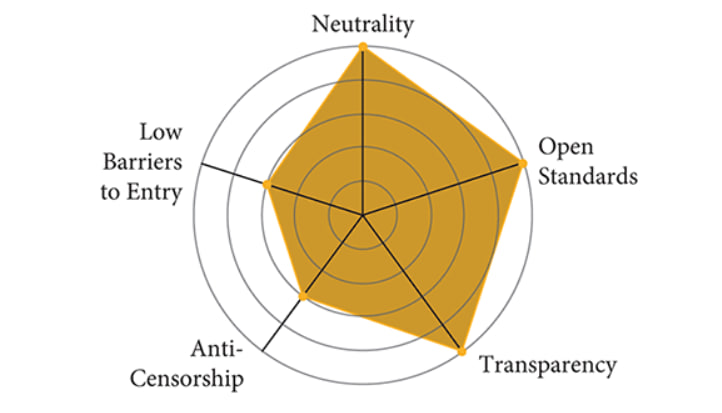

The Beijing model aims to provide order and security above all else. We see this most obviously in China, where the government regulates and controls the internet to a huge degree and promotes the messages it likes. To an extent, Western efforts to combat COVID disinformation and promote government advice were similar, in that order and safety were prioritised. So, while we may agree that the Chinese approach is undesirable if we believe in democracy and choice, the authors suggest there may be times when more state intervention is needed to protect people.

Now the fifth model, the Moscow Spoiler model, is all about disinformation, lies, and disruption. The book does discuss “hacking for good”, for example by Anonymous, but generally this model is about causing as much chaos as possible. If you are reading this, I suspect you are all too familiar with this approach: the bots, the spread of nonsense, the attempts to destabilise your enemies through information. The book offers an excellent summation: “Russia attacks the concept of truth itself, using the Internet to disseminate falsehood, partisanship, misdirection, and conspiracies, and as a result changing the Internet’s very nature.”

I have not done the book sufficient justice, but I wanted to give a broad picture of it. I think it can and does offer valuable insight. Now I want to talk a bit about NAFO, and about how this book can help us think about future efforts to fight disinformation and how to carry them out.

First of all, let’s talk about NAFO. NAFO is fascinating because, on the one hand, it embodies the Silicon Valley model: a decentralised network of individuals exchanging on equal terms. I do wonder if this is partly why we have so many older members who grew up on the early internet, when it was generally like that, though it may simply be because younger people don’t use Twitter as much. On the other hand, we work within the spaces that emerged from the DC model: we operate mostly within Twitter, a walled garden. We are not operating behind the scenes of the internet; we are out in the open, within pre-built systems, for people to see. Furthermore, in some ways we fulfil the role of the Brussels model by combatting disinformation, yet we are not the state and we do not use state power. For some, perhaps we fill a role the state should fill. For others, including myself, we would be a valuable part of the internet even if the state took a stronger role in combatting disinformation. This brings me to the next point.

Disinformation is never going away. It has existed throughout history: whenever new technology emerges, bad actors take advantage of it. Western governments have failed to find a way to combat disinformation without violating their core commitments to free speech. Some companies took on the responsibility themselves more than others did. Ultimately, these are companies and are free to do as they wish. However, we could say they have a duty to what is now the modern public square: to ensure a safe but free exchange of thought. We can justifiably say that disinformation does not fall under free speech. Of course, the challenge is deciding who determines what counts as disinformation. A less democratic country, say Russia or Turkey, may decide that any opposition is disinformation and ban it, or put pressure on people such as Musk to suppress it. What distinguishes our situation from theirs? In part, a proper democracy. But it is also worrying to give too much power to governments, or even to corporations, to uphold the principles that underpin democratic societies. We can see this now, for example, with Musk determining what is and isn’t acceptable in a public square.

If there is a duty to combat disinformation, I would say there is a role for the state in imposing certain regulations. However, much of the fight against disinformation must come from education on the one hand and from decentralised movements such as NAFO on the other. We must keep operating, and encourage new movements to form as new challenges arise. The problem before was individuals shouting into the wind alone. We must uphold the Brussels model, protecting freedom by challenging nonsense and preventing genuinely dangerous speech (threats, for example, or speech that aids the enemies of democracy), while also adhering to the Silicon Valley approach of decentralisation. If NAFO became part of a government or an arm of a corporation, it would lose its strength immediately. We work because we do not work within the confines of the state. States have largely failed to do anything, and when they do act, they can be accused, rightly or wrongly, of authoritarianism.

So the internet models can help us recognise that there is a spoiler model and what might work to stop it, and we, meaning society as a whole, have to think of ways to combat it. This can mean using state regulation (the Brussels model); putting pressure on the corporations that run social media channels to use their own power, rather than the government’s, to combat disinformation (the DC model); and harnessing the power of decentralised movements such as NAFO, and whatever emerges in the future (the Silicon Valley model), to fight disinformation and do the right thing.

This framework helps us because it allows us to separate out the different key components that are necessary. What is the duty of the state, the policymaker, the politician, the internet provider, the social media companies, the education system, public services, and so on? We can consider what sort of internet we want, see the deficiencies in the different models, and understand what world we are aiming for. Ultimately, we can see that the solutions to disinformation are varied, and that who is responsible varies too. If we recognise that we all have a role to play, that we operate in slightly different internets, and that we can perhaps bring them into harmony with the sort of internet we want, the future will be more positive.
