AI is everywhere. Even where it isn’t, they say it is (for example, MML isn’t necessarily AI). It’s the buzzword every company uses to “streamline” this or that. It’s the tool of the lazy or overworked to churn out words for their work, for their studies, whatever. And it has already permeated everything we hold dear. Many of us have been screaming this for ages, but it feels like it’s been falling on deaf or powerless ears. As someone interested in the philosophy of AI, I think there are some real dangers that cannot be stressed enough.

The latest example of the absurd use of AI is that RFK’s health report cited hallucinated references. This is dangerous because the supposed facts behind a policy that will affect the health of millions are based entirely on…nothing. The Trump cabinet also likely used ChatGPT for their insane tariff calculations, which were not based on anything you’d actually set tariffs on (though imo, tariffs are inherently disastrous for everyone, but I digress…).

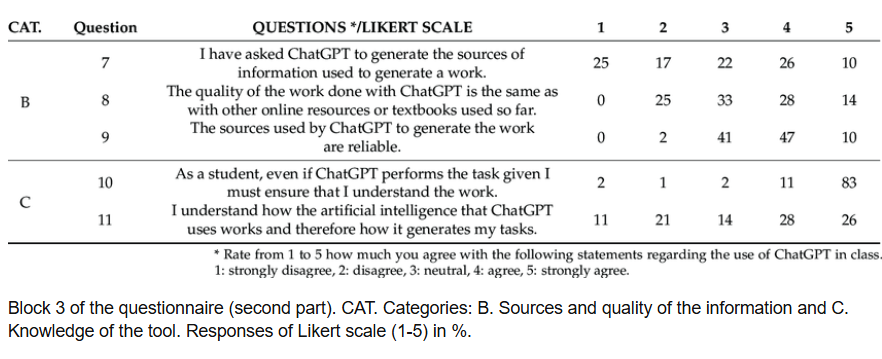

We see it in all facets of online life: disinformation, AI art, and clickbait videos and posts (the AI slop on Facebook, for example). But here we see it impacting real life too. It’s not the only case. Many university students use ChatGPT to do their work. Whether out of laziness or perhaps stress, it means they miss out on the value of doing their own work, of actually researching, of understanding why references matter. They see it as a means to an end. Same as those who make AI art. No value in and of itself.

Researchers are not exempt. Academic papers have also included AI-generated references, ones that do not exist. Journalists use it (well, hacks do). Companies are replacing workers with chatbots and AI tools. Search engines are pushing their AI tools in place of you doing the actual research, which has produced some hilarious answers, either lacking context or just absurdly wrong. Read more here: https://onlinelibrary.wiley.com/doi/10.1002/leap.1650

It’s dangerous on a fundamental level. AI is harming our democracy by speeding up the spread of disinformation, by fostering mistrust in what we experience, and by creating further inequalities as more and more people lose their jobs to companies “streamlining” themselves.

This growing mistrust is an epistemological issue, as in, it’s affecting how we acquire knowledge. We don’t even know where it will lead. It can create further bubbles within bubbles of misinformation and disinformation. It is harming our ability to find and verify facts, and to trust the very reality we perceive.

This isn’t to say AI cannot be good. It can be useful. But too often it has been developed by companies with no motive but profit, and by developers with implicit biases that they encode into the tools. Meanwhile, the lack of rigorous ethical and legal standards, or of any political ethos, has enabled AI to be used for the worst.

The far right LOVE AI. It lets them feel like they’re creative, like they’re doing something, like they’re researching, because usually they’re actually quite dumb. It also allows them to tear down everything good about the world and replace it with their techno-feudal lords. They can strip the humanity away from art.

I don’t have time to go into all of this today; this is effectively just a preliminary rant for further work on this topic. But this RFK nonsense is the tip of the iceberg, and there are no signs of any attempt to slow things down, because many people don’t want it to slow down.
